Today, companies are investing heavily in AI technologies, intelligent automation and modern ERP architectures. Despite this, many modernisation projects fail in the early stages because the quality of the underlying data is insufficient. Monolithic ERP systems have been collecting, expanding and changing data for years - often without clear rules or governance. This results in inconsistencies, Duplicates and gaps that slow down any form of AI-supported decision support. This is why a systematically structured Data quality the foundation for the entire modernisation process.

Why bad data slows down every AI

AI models recognise patterns, create forecasts and provide optimisation suggestions. However, they only work with the data they find. If this data is incomplete, contradictory or outdated, the AI produces results that are at best useless and at worst detrimental to business. Medium-sized companies in particular experience this very directly with sales forecasts, master data processes or automated Workflows.

A simple example makes the effects tangible: If there are several supplier entries in the ERP system with different spellings, addresses or payment terms, the AI does not see a standardised counterpart. Instead, it receives fragmented information. This results in incorrect order proposals, inaccurate liquidity calculations or incorrect analyses. The causes do not lie in the AI, but in the database.

That is why it is not enough just to New AI tools to be introduced. Companies must first ensure that the underlying data is consistent, up-to-date and unambiguous. Otherwise, AI will only exacerbate existing problems instead of creating added value.

Data problems in grown ERP landscapes

Most data problems do not arise in one fell swoop, but gradually. Different upstream systems, import processes and manual entries ensure that master data drifts apart over the years. In addition, rules are often applied informally but rarely documented in a binding manner. This makes it difficult to assess the data situation in day-to-day business.

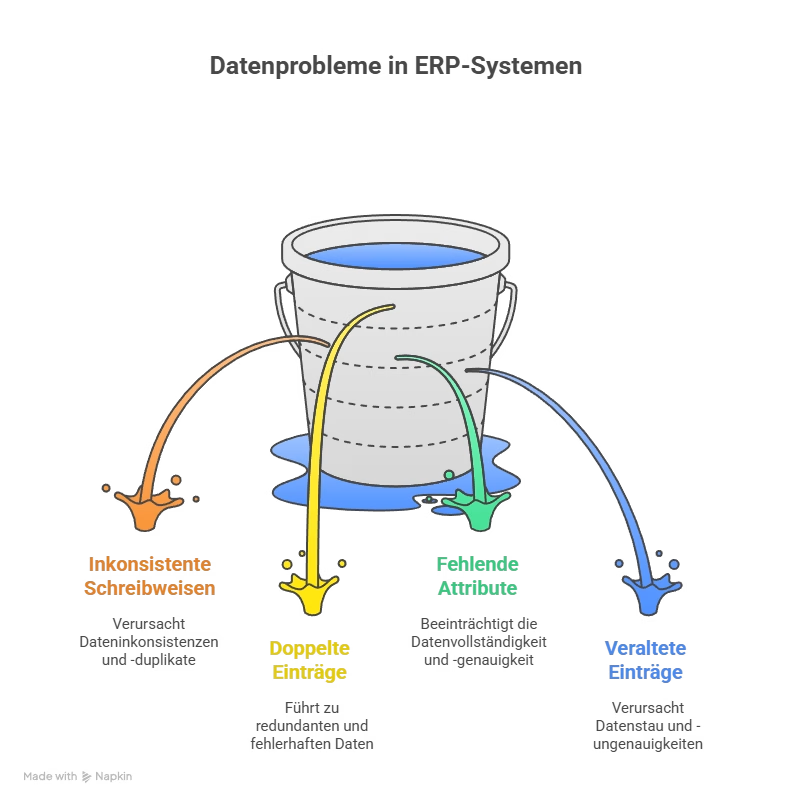

Typical effects are

- Different spellings for the same business partner

- Duplicates for articles, customers or suppliers

- Missing or incorrectly maintained attributes

- Outdated entries that have never been cleaned up or archived

These patterns run through many monolithic ERP installations. As a result, reports become inaccurate, workflows unstable and AI applications insecure. Systematic cleansing and integration of data is therefore one of the first levers for achieving improvements.

Data integration and cleansing: The first lever for improvement

This is precisely where data integration and cleansing come in. They create a consistent database from grown, heterogeneous inventories. Modern, AI-supported processes analyse structures, recognise deviating spellings and identify duplicates - often more reliably than manual checks. This allows large volumes of data to be consolidated in a manageable amount of time.

An illustrative example shows the effect: a company was faced with the task of revising around 800,000 article descriptions. Manual processing would have taken around seven years. With the help of generative AI, the task could be accelerated by 95 per cent. This resulted in a consistent article master base within six months, which was essential for further ERP modernisation steps.

This example illustrates that data cleansing is not just a technical issue. It has a direct economic impact because it strongly influences the project duration, error rate and usability of AI applications. Making targeted investments here reduces costs in all subsequent phases - from automation and analytics to Predictive maintenance scenarios.

Master data governance: structure instead of coincidence

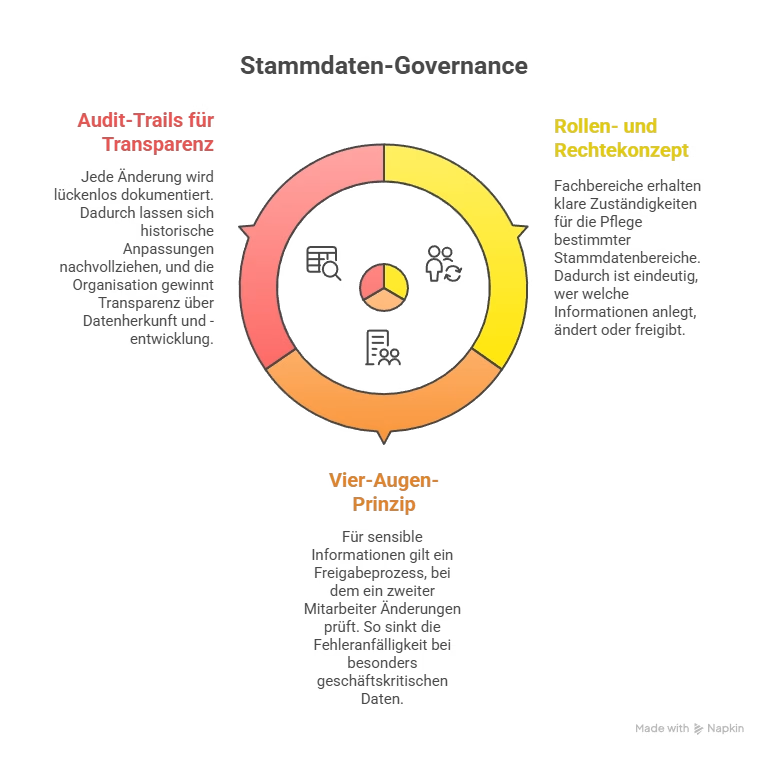

However, a one-off clean-up is not enough. Good data quality is only sustainable if clear governance structures are in place. Master data governance defines responsibilities, rights and processes so that data remains consistent in the long term. Companies should take three basic principles into account:

- Roles and rights concept

Specialist departments are given clear responsibilities for maintaining certain master data areas. This makes it clear who creates, changes or releases which information. - Four-eyes principle for critical changes

Sensitive information is subject to an approval process in which a second employee checks changes. This reduces the susceptibility to errors for particularly business-critical data. - Audit trails for transparency

Every change is fully documented. This allows historical adjustments to be traced and the organisation gains transparency regarding the origin and development of data.

These mechanisms prevent old habits from leading to new inconsistencies after modernisation. They also ensure that the system remains stable and that new AI or automation solutions can be based on a reliable database.

A comparison from practice helps to understand: if a company invests in a machine for years but neglects maintenance, the performance deteriorates despite modern technology. The same applies to ERP data - without governance, old errors automatically return.

Evaluation of historical data: What to keep, what to ignore?

Another critical point is the handling of historical data. Many companies assume that they have to transfer all historical data to new systems. This assumption leads to high costs, unnecessary complexity and longer project durations. In reality, only selected data sets are really valuable for AI or ERP-relevant processes.

Companies should therefore carry out systematic checks:

- Which data is currently being actively used?

- What information is business-critical or required by regulation?

- Which fonds can be archived or excluded?

In practice, only master data, open processes and relevant information from the last three to seven years are usually decisive. Older data can be archived without compromising modernisation. This results in leaner data migration projects, fewer sources of error and a significantly faster introduction of AI applications.

Why phase 2 is a key prerequisite for AI success

Data quality has an impact on all subsequent phases of ERP modernisation. Without a robust database, neither APIs can be used effectively nor automation processes implemented in a stable manner. In addition, LLM-based wizards, predictive models and process analyses only work reliably if the underlying information is correct and consistent.

Phase 2 therefore offers a double advantage:

- Technical: AI systems work more reliably and deliver reliable results because they are based on a cleansed and structured database.

- Organisational: Data maintenance processes are standardised and safeguarded through governance, resulting in long-term stability.

Companies that make targeted investments in this phase are not just creating a technical foundation. They develop data into a strategic resource. As a result, information becomes an active value driver of ERP modernisation instead of being perceived as a burden or risk.

Generative AI in ERP: How LLMs are changing the role of ERP systems

Preparing the ERP future with APIs and microservices

Data quality & AI : AI can only be as good as your data

AI-ERP transformation basics and AI governance

AI in the company: 4 myths about the GDPR