With the advent of generative AI and large language models (LLMs), the role of ERP systems is changing fundamentally. Instead of just posting transactions, storing data and delivering reports, they are developing into interactive, learning systems. As a result, they support human decisions, accelerate processes and can even take over parts of them.

The integration of such technologies into traditional ERP landscapes represents a real turning point. However, it is crucial that this change takes place strategically rather than opportunistically or purely experimentally. LLMs such as GPT or Claude act as "copilots" that complement existing systems rather than supplanting them.

From transaction system to interactive partner

Rule-based ERP systems vs. adaptive language models

Traditional ERP systems are rule-based and largely deterministic. They do exactly what they are explicitly told to do, no more and no less. This makes them stable, but they can only respond within clearly defined limits.

Generative AI, on the other hand, is context-sensitive, probabilistic and adaptive. It interprets inputs in context, recognises patterns and generates content that goes beyond traditional if-then logic. This opens up new ways of interacting with the ERP system that go beyond input screens, transactions and static reports.

Central fields of application of generative AI in the ERP context

This different mode of operation gives rise to new fields of application in the ERP environment:

- Automated text generation, for example for offers or product descriptions

- Intelligent user guidance directly in the system

- Process analysis and pattern recognition in processes and data

- Assistance with requirements and code creation

- Real-time support for operational and technical decisions

These functions can be integrated into existing structures without replacing the core system. LLMs thus accompany users and developers like a copilot throughout core ERP processes.

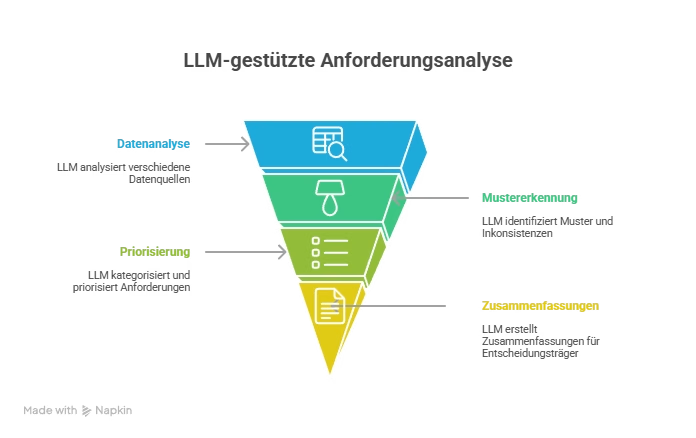

Automating requirements analysis with LLMs

In classic ERP projects, requirements analysis consumes a great deal of time and resources. Interviews, workshops, minutes, documentation and coordination are usually handled manually. This often creates a gap between the business language of the departments and the technical implementation.

An LLM can significantly accelerate and structure this phase. It analyses meeting minutes, system data and departmental documents in great detail, and it recognises patterns, duplicate requirements and inconsistencies that are easily overlooked in a manual review.

In addition, an LLM can automatically categorise and prioritise requirements according to predefined criteria. It creates summaries that give decision-makers a quick overview of conflicts, dependencies and gaps. This can cut the time spent in the analysis phase by around 30 to 60 % without any loss of detail.

This leaves more time for professional evaluation and the conscious design of processes, while routine structuring work is shifted to the AI.
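The LLM step itself cannot be reproduced in a few lines, but the deterministic part around it can. The following Python sketch is a simplified stand-in, not an LLM. It flags near-duplicate requirements using plain string similarity from the standard library (`difflib.SequenceMatcher`) and ranks them by predefined criteria. The `Requirement` fields, the 0.8 similarity threshold and the rule "legal obligations first, then business value" are hypothetical examples of such criteria.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from itertools import combinations


@dataclass
class Requirement:
    req_id: str
    text: str
    business_value: int      # hypothetical scale: 1 (low) to 5 (high)
    legal_obligation: bool   # hypothetical criterion: required by law or regulation


def find_near_duplicates(reqs: list[Requirement], threshold: float = 0.8) -> list[tuple[str, str]]:
    """Return ID pairs whose texts are at least `threshold` similar (0.0 to 1.0)."""
    pairs = []
    for a, b in combinations(reqs, 2):
        similarity = SequenceMatcher(None, a.text.lower(), b.text.lower()).ratio()
        if similarity >= threshold:
            pairs.append((a.req_id, b.req_id))
    return pairs


def prioritise(reqs: list[Requirement]) -> list[Requirement]:
    """Sort by the hypothetical criteria: legal obligations first, then higher business value."""
    return sorted(reqs, key=lambda r: (not r.legal_obligation, -r.business_value))


# Illustrative sample data, not taken from a real project.
sample = [
    Requirement("R1", "Invoices must be archived for ten years", 3, True),
    Requirement("R2", "Invoices must be archived for 10 years", 3, True),
    Requirement("R3", "Sales wants a dashboard of open orders", 5, False),
]

print(find_near_duplicates(sample))            # [('R1', 'R2')]
print([r.req_id for r in prioritise(sample)])  # ['R1', 'R2', 'R3']
```

String similarity only catches near-identical wording. Recognising requirements that mean the same thing in different words is the part an LLM would contribute.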

Understanding legacy code and generating new code

Large ERP systems such as SAP often contain tens of thousands of lines of ABAP code. This code has grown over time, has been extended repeatedly and is often poorly documented. The result is veritable "code deserts" that are difficult to understand, even for experienced developers.

Generative AI helps to structure and decipher these landscapes. It analyses existing Customizing objects and source code, documents outdated logic with comments and proposes refactorings. This makes it possible to see what individual code sections actually do and where redundancies exist.

At the same time, an LLM supports the creation of unit tests by suggesting sensible test cases based on existing functions. It can also generate new code from functional descriptions, which developers then review and adapt.

Here, LLMs explicitly act as technical co-developers. They do not produce code blindly but support human developers with suggestions, explanations and pattern recognition. As a result, development times can be reduced by up to 40 %, while knowledge about the system is documented more thoroughly.

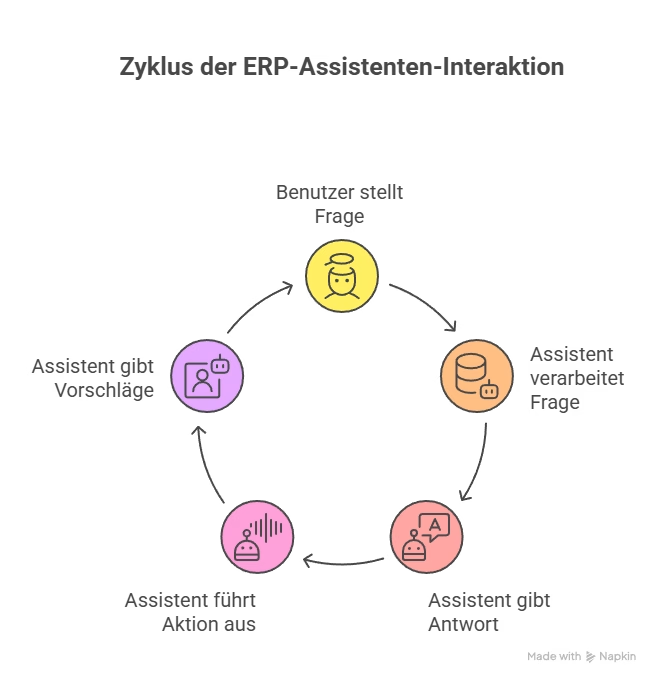

Intelligent user guidance through AI-supported assistants

A digital ERP assistant based on an LLM, for example in the form of a chatbot, can interact directly with users. Instead of searching for menu paths or reading manuals, users formulate their requests in natural language.

The assistant answers context-sensitive questions and draws on ERP data and process logic. It can also perform simple actions directly, for example: "Show all outstanding invoices from customer X". This significantly reduces the navigation effort in the system.
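A common way to let an assistant trigger actions like this is to have the LLM return a structured call instead of free text. The ERP side then executes only whitelisted functions. The Python sketch below assumes the model has already translated the request into JSON of the form `{"tool": ..., "arguments": {...}}`. The tool name `list_open_invoices`, the `Invoice` fields and the in-memory data are hypothetical stand-ins for a real ERP interface, and no actual LLM API is called.

```python
import json
from dataclasses import dataclass
from datetime import date


@dataclass
class Invoice:
    number: str
    customer_id: str
    amount: float
    due: date
    paid: bool


# Hypothetical in-memory stand-in for ERP invoice data.
INVOICES = [
    Invoice("INV-1001", "C042", 1200.0, date(2024, 5, 31), False),
    Invoice("INV-1002", "C042", 800.0, date(2024, 4, 30), True),
    Invoice("INV-1003", "C077", 450.0, date(2024, 6, 15), False),
]


def list_open_invoices(customer_id: str) -> list[Invoice]:
    """Read-only query: unpaid invoices for one customer."""
    return [inv for inv in INVOICES if inv.customer_id == customer_id and not inv.paid]


# Whitelist of actions the assistant may trigger; anything else is rejected.
ALLOWED_TOOLS = {"list_open_invoices": list_open_invoices}


def dispatch(llm_output: str):
    """Execute a tool call that the LLM returned as JSON (the JSON shape is an assumption)."""
    call = json.loads(llm_output)
    tool = ALLOWED_TOOLS.get(call.get("tool"))
    if tool is None:
        raise PermissionError(f"Tool not allowed: {call.get('tool')!r}")
    return tool(**call.get("arguments", {}))


request = '{"tool": "list_open_invoices", "arguments": {"customer_id": "C042"}}'
print([inv.number for inv in dispatch(request)])  # ['INV-1001']
```

Keeping the whitelist on the ERP side means the LLM can only choose among actions that have been explicitly released. The deterministic ERP logic stays in charge of what is actually executed.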

The assistant also makes suggestions based on past usage patterns and guides users through processes without the need for extensive training. This brings a noticeable benefit, especially for occasional users who rarely work in the ERP system: they quickly receive the information they need without requiring in-depth knowledge of the system.

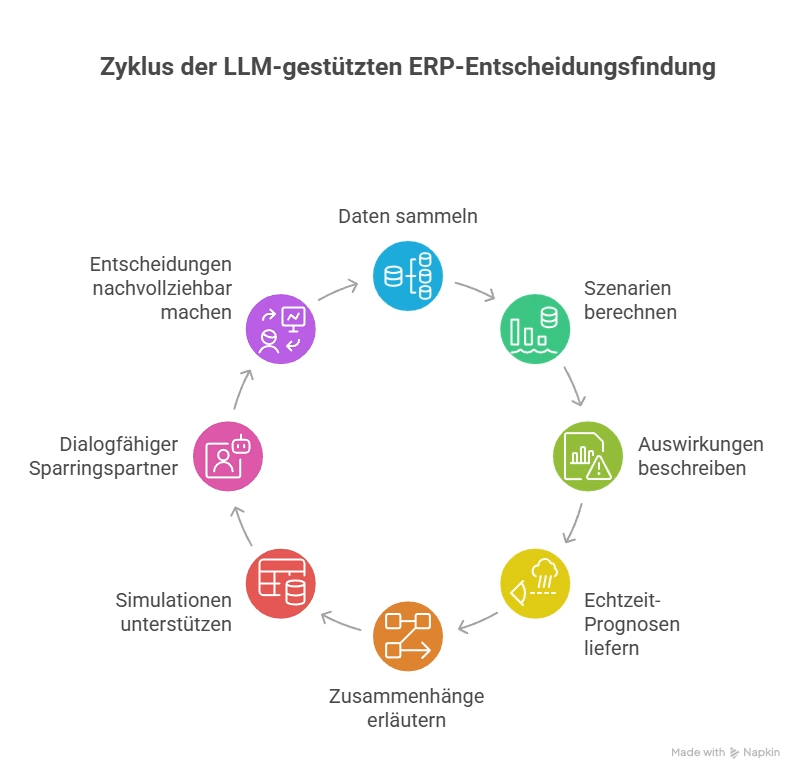

Forecasting, scenario analysis and decision support

LLMs can be combined with classic AI techniques to support decisions in the ERP environment. While traditional models forecast figures, the LLM provides a conversational interface and contextual understanding.

This enables what-if analyses that can be formulated in natural language: "How will my stock level change with a 10 % increase in demand?" The system accesses current data, calculates scenarios and describes the effects.
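The division of labour behind such a question can be sketched in a few lines. The LLM would map the question to a parameter (here `demand_change=0.10`), and a deterministic calculation would produce the figures that the LLM then describes. The Python example assumes a deliberately simple stock model with constant weekly demand and constant weekly receipts. The function name and all numbers are hypothetical.

```python
def project_stock(opening_stock: float, weekly_demand: float, weekly_receipts: float,
                  weeks: int, demand_change: float = 0.0) -> list[float]:
    """Project end-of-week stock levels under a simple constant-flow model.

    demand_change is a relative change, e.g. 0.10 for a 10 % increase in demand.
    """
    demand = weekly_demand * (1 + demand_change)
    stock = opening_stock
    levels = []
    for _ in range(weeks):
        stock += weekly_receipts - demand
        levels.append(round(stock, 2))  # round to hide floating-point noise
    return levels


baseline = project_stock(1000, weekly_demand=200, weekly_receipts=180, weeks=4)
scenario = project_stock(1000, weekly_demand=200, weekly_receipts=180, weeks=4, demand_change=0.10)
print(baseline)                     # [980.0, 960.0, 940.0, 920.0]
print(scenario)                     # [960.0, 920.0, 880.0, 840.0]
print(scenario[-1] - baseline[-1])  # -80.0
```

A real scenario would also account for lead times, safety stock and planned orders. The point here is only that the figures come from a reproducible calculation rather than from the language model itself.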

Real-time forecasts of sales trends, liquidity planning or delivery times are also possible. The AI not only provides figures but also explains correlations, and it supports simulations of planning scenarios based on current data models.

As a result, AI no longer acts as a static evaluation tool but as a conversational sparring partner. It understands the context, asks follow-up questions and proactively offers alternatives. This makes decisions easier to follow because the path to the recommendation remains transparent.

Prerequisites for the successful use of generative AI in ERP

Despite its potential, a number of prerequisites must be met for generative AI to be used safely and effectively in the ERP environment.

Firstly, LLMs require structured and controlled data access. They can only be as good as the process data, product master data and interaction logs they rely on, and that data must be well maintained and accessible. Clear security and authorisation concepts are also needed: sensitive ERP data must not be processed in an uncontrolled manner, which is why role-based access control and technical guardrails are essential.
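One way to implement such a guardrail is to filter ERP data by role before it ever reaches the LLM's context. The Python sketch below assumes a simple mapping from roles to permitted fields. The role names, field names and sample record are hypothetical. A production system would derive these rules from the ERP's own authorisation concept rather than from a hard-coded dictionary.

```python
from typing import Any

# Hypothetical role model: which ERP fields each role may pass to the LLM.
ROLE_FIELDS: dict[str, set[str]] = {
    "sales_clerk": {"customer_id", "invoice_number", "amount", "due_date"},
    "controller": {"customer_id", "invoice_number", "amount", "due_date", "margin", "cost_center"},
}


def build_llm_context(records: list[dict[str, Any]], role: str) -> list[dict[str, Any]]:
    """Return copies of the records containing only the fields the role may see.

    Unknown roles are rejected outright instead of falling back to full access.
    """
    allowed = ROLE_FIELDS.get(role)
    if allowed is None:
        raise PermissionError(f"No data access defined for role {role!r}")
    return [{key: value for key, value in rec.items() if key in allowed} for rec in records]


record = {
    "customer_id": "C042",
    "invoice_number": "INV-1001",
    "amount": 1200.0,
    "due_date": "2024-05-31",
    "margin": 0.18,
    "cost_center": "CC-100",
}
print(build_llm_context([record], "sales_clerk"))
# [{'customer_id': 'C042', 'invoice_number': 'INV-1001', 'amount': 1200.0, 'due_date': '2024-05-31'}]
```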

Transparency is crucial, especially in finance. It must be possible to understand how AI proposals come about and which data they are based on. Only then can decisions be documented in an auditable manner.

At the same time, humans remain part of the process in a human-in-the-loop approach. LLMs should make recommendations, but decisions must remain verifiable and correctable. This creates an interplay between automation and deliberate control.
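Both requirements, human approval and traceability, can be enforced in code. In this design, an AI proposal records which data it is based on and can only be executed after a named person has approved it. The Python sketch below is a minimal illustration. The `AIProposal` class, its status values and the `post_to_erp` callback are hypothetical and would be replaced by the ERP's own workflow and posting interfaces.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class AIProposal:
    proposal_id: str
    action: str
    data_sources: list[str]   # which ERP data the proposal is based on
    status: str = "pending"   # pending -> approved or rejected
    audit_log: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        self.record(f"proposed '{self.action}' based on {', '.join(self.data_sources)}")

    def record(self, event: str) -> None:
        """Append a timestamped entry so every step stays traceable."""
        self.audit_log.append(f"{datetime.now(timezone.utc).isoformat()} {event}")

    def decide(self, reviewer: str, approve: bool, reason: str = "") -> None:
        """Record a human decision; each proposal can be decided only once."""
        if self.status != "pending":
            raise ValueError(f"Proposal {self.proposal_id} was already {self.status}")
        self.status = "approved" if approve else "rejected"
        entry = f"{self.status} by {reviewer}"
        self.record(entry + (f": {reason}" if reason else ""))


def execute(proposal: AIProposal, post_to_erp: Callable[[str], None]) -> None:
    """Post the proposed action only if a human reviewer has approved it."""
    if proposal.status != "approved":
        raise PermissionError(f"Proposal {proposal.proposal_id} has not been approved")
    post_to_erp(proposal.action)
    proposal.record("executed")


posted: list[str] = []
p = AIProposal("P-1", "Reduce safety stock of item 4711 to 150 units",
               ["stock history 2023", "open sales orders"])
p.decide("j.doe", approve=True)
execute(p, posted.append)
print(posted)  # ['Reduce safety stock of item 4711 to 150 units']
```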

ERP + LLM: synergy instead of system replacement

The aim is not to replace ERP systems with LLMs but to extend their boundaries. Traditional systems are structured, precise and rule-based. LLMs complement these strengths with flexibility, language understanding and contextual intelligence.

The combination creates a hybrid system:

- the ERP ensures transactional precision

- the LLM provides interactive intelligence

The added value is created where the two intersect. This can be seen, for example, in the interaction with users, in the analysis of unstructured data or in the automated derivation of recommendations for action from existing information. As a result, the ERP system remains the stable backbone of the company's processes, while the LLM forms the bridge between people, data and decisions.

Outlook: Generative AI as an extension of the digital workplace

In future, ERP systems will have to do more than just post, store and report. They should actively make suggestions, take on tasks and adapt to the user. Generative AI and LLMs are the key to this expansion of the digital workplace.

However, success depends on how controlled, targeted and governance-compliant the use of these technologies is. Anyone who takes data management, security and transparency seriously can integrate LLMs into ERP landscapes so that pure transaction machines become genuine interactive partners in day-to-day business.
